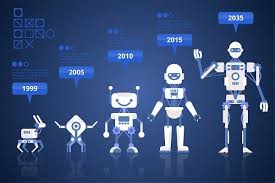

The Evolution of Artificial Intelligence

Artificial Intelligence (AI) has evolved from a speculative idea in science fiction into a technology that permeates many aspects of daily life. Understanding this evolution involves tracing AI's historical roots, examining its key developments, and considering its implications across various fields.

Early Concepts and Foundations

The idea of artificial intelligence has ancient precursors: myths and legends from many cultures featured automata and artificial beings. However, the formal groundwork for AI as a field was laid in the mid-20th century. In 1956, the Dartmouth Summer Research Project on Artificial Intelligence, commonly called the Dartmouth Conference, marked a pivotal moment in AI history. Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, it brought together researchers who conjectured that aspects of human intelligence could be described precisely enough for a machine to simulate them.

During this period, early AI research focused on symbolic approaches, in which human knowledge was represented with explicit rules and logic. Programs such as the Logic Theorist, which proved theorems from Whitehead and Russell's Principia Mathematica, and the General Problem Solver aimed to mimic human reasoning on mathematical and logical tasks. However, the limitations of these early systems soon became evident: they struggled with the complexity and ambiguity of real-world problems.

The Rise of Machine Learning

By the 1980s, the field was shifting toward machine learning, a subset of AI that enables systems to learn patterns from data rather than relying solely on predefined rules. Although the term itself dates back to the late 1950s, this paradigm shift was catalyzed by advances in algorithms and statistical methods and, increasingly, by the availability of larger datasets.

One significant development was renewed interest in neural networks, computational models loosely inspired by the way neurons in the brain process information. The popularization of backpropagation in the mid-1980s made it practical to train multi-layered neural networks, paving the way for more sophisticated AI applications.
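For readers who want to see what backpropagation actually computes, here is a minimal sketch in plain Python. It is an illustrative assumption, not a reconstruction of any historical system: the network shape (2 inputs, 4 hidden sigmoid units, 1 output), the squared-error loss, the learning rate, the XOR training data, and all function names (`init_params`, `forward`, `gradients`, `train`) are hypothetical choices. The backward pass applies the chain rule layer by layer, starting from the output error and propagating it back to the first-layer weights, which is the step that makes training networks with hidden layers practical.

```python
import math
import random

# Minimal sketch of backpropagation (assumed setup, not from the source):
# 2 inputs -> N_HIDDEN sigmoid units -> 1 sigmoid output, squared-error loss.
N_HIDDEN = 4

# XOR: a classic task that a single sigmoid unit without a hidden layer cannot solve.
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed=0):
    """Small random weights; a fixed seed keeps runs reproducible."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(N_HIDDEN)],
        "b1": [0.0] * N_HIDDEN,
        "W2": [rng.uniform(-1.0, 1.0) for _ in range(N_HIDDEN)],
        "b2": 0.0,
    }


def forward(p, x):
    """Return the hidden activations and the network output for input x."""
    h = [sigmoid(row[0] * x[0] + row[1] * x[1] + b) for row, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y_hat


def loss(p, data):
    """Mean of 0.5 * (prediction - target)^2 over the dataset."""
    return sum(0.5 * (forward(p, x)[1] - y) ** 2 for x, y in data) / len(data)


def gradients(p, data):
    """Backward pass: chain rule from the output error back to the first layer."""
    g = {
        "W1": [[0.0, 0.0] for _ in range(N_HIDDEN)],
        "b1": [0.0] * N_HIDDEN,
        "W2": [0.0] * N_HIDDEN,
        "b2": 0.0,
    }
    n = len(data)
    for x, y in data:
        h, y_hat = forward(p, x)
        # Output error signal dL/dz_out, using sigmoid'(z) = s * (1 - s).
        delta_out = (y_hat - y) * y_hat * (1.0 - y_hat) / n
        g["b2"] += delta_out
        for j in range(N_HIDDEN):
            g["W2"][j] += delta_out * h[j]
            # Propagate the error back through W2 and the hidden sigmoid.
            delta_h = delta_out * p["W2"][j] * h[j] * (1.0 - h[j])
            g["b1"][j] += delta_h
            g["W1"][j][0] += delta_h * x[0]
            g["W1"][j][1] += delta_h * x[1]
    return g


def train(p, data, lr=2.0, epochs=2000):
    """Plain full-batch gradient descent using the backpropagated gradients."""
    for _ in range(epochs):
        g = gradients(p, data)
        for j in range(N_HIDDEN):
            p["W2"][j] -= lr * g["W2"][j]
            p["b1"][j] -= lr * g["b1"][j]
            p["W1"][j][0] -= lr * g["W1"][j][0]
            p["W1"][j][1] -= lr * g["W1"][j][1]
        p["b2"] -= lr * g["b2"]
    return p


if __name__ == "__main__":
    params = init_params(seed=0)
    print("loss before:", loss(params, XOR))
    train(params, XOR, lr=2.0, epochs=5000)
    print("loss after:", loss(params, XOR))
    for x, y in XOR:
        print(x, "target", y, "output", round(forward(params, x)[1], 3))
```

Running the file prints the loss before and after training and the network's output for each XOR input; the outputs typically move toward the 0/1 targets, although whether such a tiny network fully converges can depend on its random initialization. A hand-written backward pass like this is usually sanity-checked by comparing its gradients against finite-difference estimates of the same derivatives.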

Despite these advances, AI research also went through “AI winters”: periods of reduced funding and interest that followed unmet expectations. By the late 1990s and early 2000s, however, interest revived, driven by improvements in computing power, the advent of the internet, and the proliferation of data.

The Big Data Revolution

The explosion of data generated from digital interactions, sensors, and devices created an unprecedented opportunity for AI. The concept of “big data” emerged, emphasizing the importance of analyzing vast amounts of information to derive insights. Machine learning algorithms, particularly deep learning, became the cornerstone of AI applications during this era.

Deep learning, which involves training neural networks with many layers, revolutionized fields such as computer vision and natural language processing. DeepMind's AlphaGo, which combined deep neural networks with tree search, defeated Lee Sedol, one of the world's top Go players, in 2016, showcasing the capabilities of AI in complex decision-making environments. Similarly, advances in image and speech recognition brought AI into everyday technology, from virtual assistants like Siri and Alexa to facial recognition systems.

AI in the Modern Era

Today, AI is embedded in a wide range of applications across diverse sectors. In healthcare, AI systems assist in diagnosing diseases, analyzing medical images, and personalizing treatment plans. In finance, algorithms are employed for fraud detection, risk assessment, and automated trading. In the automotive industry, self-driving cars under development rely on AI to perceive their surroundings and make real-time driving decisions.

Moreover, AI is transforming industries like manufacturing, where robotics and automation improve efficiency and precision. In education, adaptive learning platforms utilize AI to tailor educational experiences to individual student needs, enhancing engagement and outcomes.

Ethical Considerations and Future Challenges

As AI continues to advance, ethical considerations have become increasingly important. Concerns regarding privacy, bias, and accountability are at the forefront of discussions about AI deployment. For instance, biased data can lead to discriminatory outcomes in AI algorithms, raising questions about fairness and transparency.

Additionally, the potential for job displacement due to automation poses economic and social challenges. While AI can enhance productivity, it also necessitates a reevaluation of workforce skills and job roles. Preparing for a future where AI and humans coexist requires proactive measures in education and workforce development.

The Future of AI

Looking ahead, the future of AI is both promising and complex. Research continues to push the boundaries of what AI can achieve, with developments in areas such as explainable AI, which seeks to make AI decision-making more transparent and interpretable. The integration of AI with other technologies, such as the Internet of Things (IoT) and quantum computing, holds the potential to unlock new capabilities and applications.

Moreover, as AI becomes more pervasive, ongoing dialogue about its ethical implications will be crucial. Establishing frameworks for responsible AI development and deployment can help mitigate risks while maximizing benefits.

Conclusion

The evolution of artificial intelligence reflects a remarkable journey marked by innovation, setbacks, and transformative breakthroughs. From its conceptual origins to its current applications across various domains, AI has the potential to redefine our world. As we move forward, the challenge lies not only in advancing the technology but also in ensuring it is developed and utilized ethically and responsibly. The future of AI holds immense possibilities, and navigating this landscape will require collaboration, foresight, and a commitment to the common good.